With the projection surface (mostly) complete, I moved on to some projection tests and started working on alignment. As I mentioned in a previous post, I’m using OpenNI in Processing to track users via Kinect, but I’ve modified the library to track the average point of multiple users. Using this average point, I’ve aligned the tracked regions to the physical space so that each region maps to a group of servos. Right now each region is one column, and there are 8 columns, but finer resolution is coming.
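To make the averaging-and-mapping idea concrete, here is a minimal sketch in plain Java (the language underneath Processing). It is not the modified library code: it assumes each tracked user is reduced to a single horizontal position, and the class name `ColumnMapper`, the calibration bounds `minX`/`maxX`, and the example coordinates are all hypothetical. It averages the users' positions and splits the calibrated range into 8 equal-width servo columns.

```java
import java.util.List;

/**
 * Hypothetical sketch: average the horizontal positions of all tracked users
 * and map that average onto one of NUM_COLUMNS servo columns.
 */
public class ColumnMapper {
    static final int NUM_COLUMNS = 8; // 8 servo columns, as in the post

    // Assumed calibration bounds: the tracker x values that line up with the
    // left and right edges of the physical installation.
    private final float minX;
    private final float maxX;

    public ColumnMapper(float minX, float maxX) {
        if (maxX <= minX) throw new IllegalArgumentException("maxX must exceed minX");
        this.minX = minX;
        this.maxX = maxX;
    }

    /** Mean of the users' x positions; NaN when nobody is being tracked. */
    public static float averageX(List<Float> userXs) {
        if (userXs.isEmpty()) return Float.NaN;
        float sum = 0f;
        for (float x : userXs) sum += x;
        return sum / userXs.size();
    }

    /** Column index 0..NUM_COLUMNS-1, clamped to the calibrated range; -1 for NaN. */
    public int columnFor(float x) {
        if (Float.isNaN(x)) return -1;
        float t = (x - minX) / (maxX - minX);       // normalize to [0, 1]
        int col = (int) Math.floor(t * NUM_COLUMNS); // equal-width columns
        return Math.max(0, Math.min(NUM_COLUMNS - 1, col));
    }

    public static void main(String[] args) {
        ColumnMapper mapper = new ColumnMapper(-2000f, 2000f);
        float avg = averageX(List.of(-500f, 300f, 1100f));
        // prints: average x = 300.0, column = 4
        System.out.println("average x = " + avg + ", column = " + mapper.columnFor(avg));
    }
}
```

Equal-width columns are the simplest choice. If the physical columns are not evenly spaced relative to the sensor, a per-column lookup table of measured boundaries would be the natural next step.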

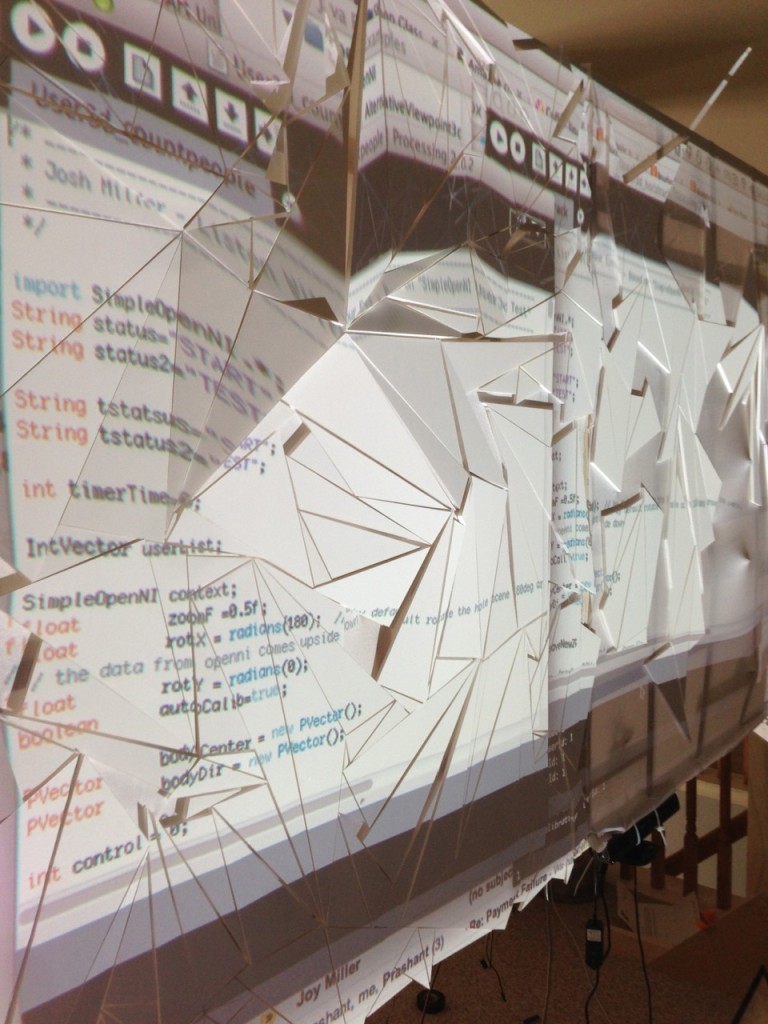

The projector is bright and has enough resolution for proper projection mapping (that’s a future addition). Here’s a shot of the screen with my Processing sketch projected on top.

First videos of the alignment tests:

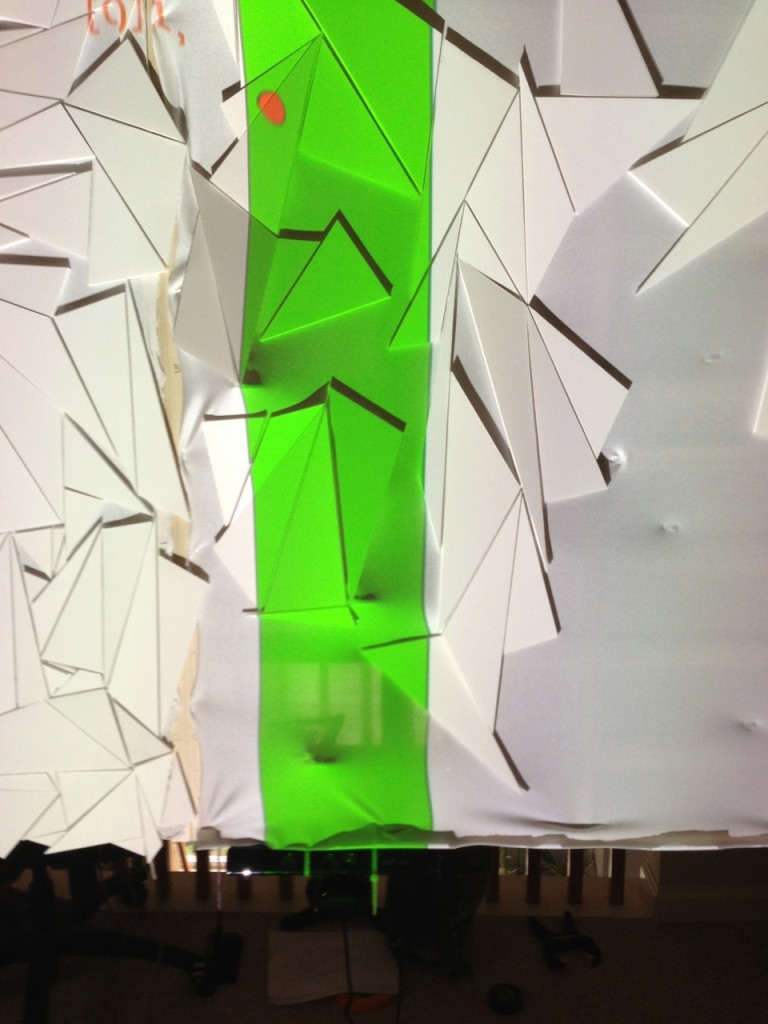

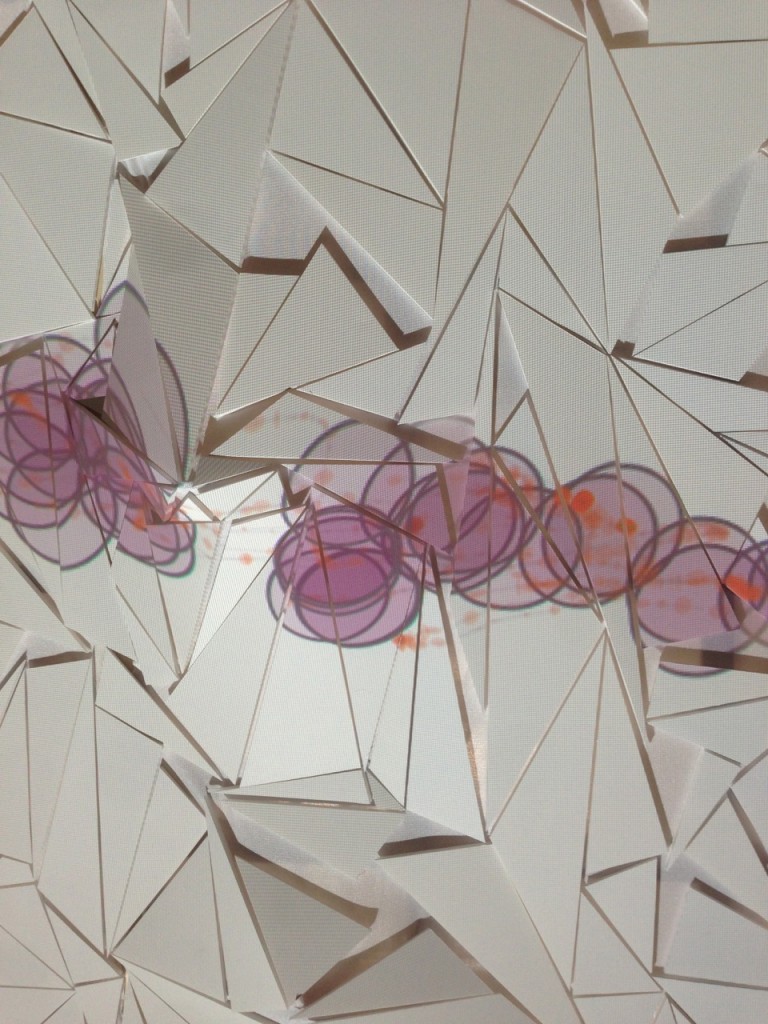

I’m now working on the visuals for the piece. They won’t be finalized in time for the 2013 Biennial Faculty Exhibition at Kutztown, but I have some basic visualizations working, so I can exhibit the piece in the show. I expect to evolve the visuals over the month the piece is on display and to work out the kinks in the display and code during the show. This should lead to a more polished, functional final project when it’s completed later this fall. Below are a projection visualization and a shot from behind the installation, which is perhaps more beautiful than the projection itself.
